Reading List

The most recent articles from a list of feeds I subscribe to.

The Mobile Performance Inequality Gap, 2021

TL;DR: A lot has changed since 2017 when we last estimated a global baseline resource budget of 130-170KiB per-page. Thanks to progress in networks and browsers (but not devices), a more generous global budget cap has emerged for sites constructed the "modern" way. We can now afford ~100KiB of HTML/CSS/fonts and ~300-350KiB of JS (gzipped). This rule-of-thumb limit should hold for at least a year or two. As always, the devil's in the footnotes, but the top-line is unchanged: when we construct the digital world to the limits of the best devices, we build a less usable one for 80+% of the world's users.

Way back in 2016, I tried to raise the alarm about the causes and effects of terrible performance for most users of sites built with popular frontend tools. The negative effects were particularly pronounced in the fastest-growing device segment: low-end to mid-range Android phones.

Bad individual experiences can colour expectations of the entire ecosystem. Your company's poor site performance can manifest as lower engagement, higher bounce rates, or a reduction in conversions. While this local story is important, it isn't the whole picture. If a large enough proportion of sites behave poorly, performance hysteresis may colour user views of all web experiences.

Unless a site is launched from the home screen as a PWA, sites are co-mingled. Pages are experienced as a series of taps, flowing effortlessly across sites; a river of links. A bad experience in the flow is a bad experience of the flow, with constituent parts blending together.[1]

If tapping links tends to feel bad...why keep tapping? It's not as though slow websites are the only way to access information. Plenty of native apps are happy to aggregate content and serve it up in a reliably fast package, given half a chance. The consistency of those walled gardens is a large part of what the mobile web is up against — and losing to.

Poor performance of sites that link to and from yours negatively impacts engagement on your site, even if it is consistently snappy. Live by the link, die by the link.

The harmful business impact of poor performance is constantly re-validated. Big decreases in performance predictably lead to (somewhat lower) decreases in user engagement and conversion. The scale of the effect can be deeply situational or hard to suss out without solid metrics, but it's there.

Variance contributes another layer of concern; high variability in responsiveness may create effects that perceptually dominate averages, or even medians. If 9 taps in 10 respond in 100ms, but every 10th takes a full second, what happens to user confidence and engagement? These deep-wetware effects and their cross-origin implications mean that your site's success is, partially, a function of the health of the commons.
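To make the variance point concrete, here is a minimal Python sketch of the hypothetical "9 fast taps, 1 slow tap" distribution above. It shows that the median hides the slow tap entirely and the mean only partially reflects it; you have to look at high percentiles to see it. The nearest-rank percentile helper is an illustrative choice, not how any particular RUM tool computes percentiles.

```python
import math
import statistics


def nearest_rank_percentile(samples, p):
    """Return the p-th percentile (0 < p <= 100) using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Hypothetical tap-to-response latencies in ms: 9 fast responses, 1 slow one.
taps_ms = [100] * 9 + [1000]

mean_ms = statistics.mean(taps_ms)               # 190
median_ms = statistics.median(taps_ms)           # 100.0
p90_ms = nearest_rank_percentile(taps_ms, 90)    # 100
p95_ms = nearest_rank_percentile(taps_ms, 95)    # 1000

print(f"mean={mean_ms}ms median={median_ms}ms p90={p90_ms}ms p95={p95_ms}ms")
```

The median says every tap is fast; only the tail percentile reveals the one-in-ten tap that takes a full second.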

From this perspective, it's helpful to consider what it might take to set baselines that can help ensure minimum quality across link taps, so in 2017 I followed up with a post sketching a rubric for thinking about a global baseline.

The punchline:

The default global baseline is a ~$200 Android device on a 400Kbps link with a 400ms round-trip-time ("RTT"). This translates into a budget of ~130-170KB of critical-path resources, depending on composition — the more JS you include, the smaller the bundle must be.

A $200USD device at the time featured 4-8 (slow, in-order, low-cache) cores, ~2GiB of RAM, and pokey MLC NAND flash storage. The Moto G4, for example.

The 2017 baseline represented a conservative, but evidence-driven, interpretation of the best information I could get regarding network performance, along with trend lines regarding device shipment volumes and price points.[2] Getting accurate global information that isn't artificially reduced to averages remains an ongoing challenge. Performance work often focuses on high percentile users (the slowest), after all.

Since then, the metrics conversation has moved forward significantly, culminating in Core Web Vitals, reported via the Chrome User Experience Report to reflect the real-world experiences of users.

Devices and networks have evolved too:

An update on mobile CPUs and the Performance Inequality Gap:

Mid-tier Android devices (~$300) now get the single-core performance of a 2014 iPhone and the multi-core perf of a 2015 iPhone.

The cheapest (high volume) Androids perform like 2012/2013 iPhones, respectively. twitter.com/slightlylate/status/1139684093602349056

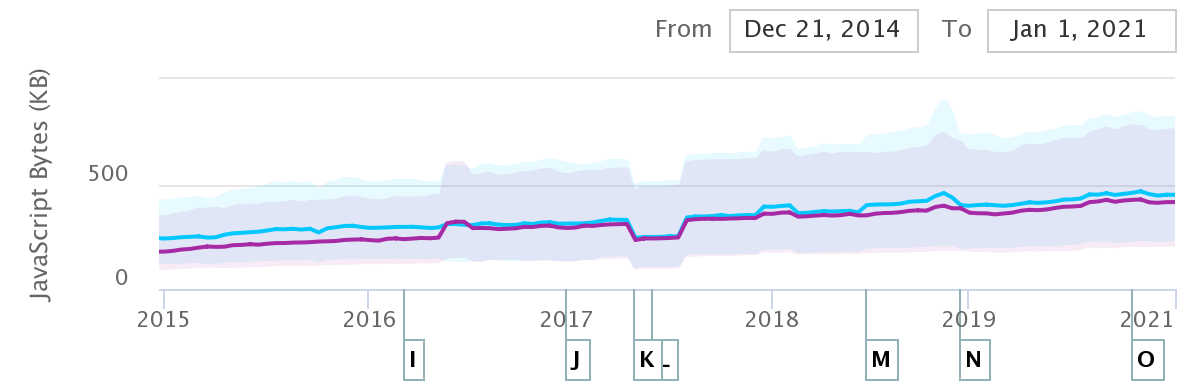

Meanwhile, developer behaviour offers little hope:

A silver lining on this dark cloud is that mobile JavaScript payload growth paused in 2020. How soon will low-end and middle-tier phones be able to handle such chonky payloads? If the median site continued to send 3x the recommended amount of script, when would the web start to feel usable on most of the world's devices?

Here begins our 2021 adventure.

Hard Reset

To update our global baseline from 2017, we want to update our priors on a few dimensions:

- The evolved device landscape

- Modern network performance and availability

- Advances in browser content processing

Content Is Dead, Long Live Content

Good news has consistently come from the steady pace of browser progress. The single largest improvements visible in traces come from improved parsing and off-thread compilation of JavaScript. This step-change, along with improvements to streaming compilation, has helped to ensure that users are less likely to notice the unreasonably-sized JS payloads that "modern" toolchains generate more often than not. Better use of more cores (moving compilation off-thread) has given sites that provide HTML and CSS content a fighting chance of remaining responsive, even when saddled with staggering JS burdens.

A steady drumbeat of improvements has contributed to reduced runtime costs, too, though I fear they most often create an induced demand effect.

The residual main-thread compilation, allocation, and script-driven DOM/Layout tasks pose a challenge for delivering a good user experience.[3] As we'll see below, CPUs are not improving fast enough to cope with frontend engineers' rosy resource assumptions. If there is unambiguously good news on the tooling front, it's that multiple popular tools now include options to prevent sending first-party JS in the first place (Next.js, Gatsby), though the JS community remains in stubborn denial about the costs of client-side script. Hopefully, toolchain progress of this sort can provide a more accessible bridge as we transition costs to a reduced-script-emissions world.

4G Is A Miracle, 5G Is A Mirage

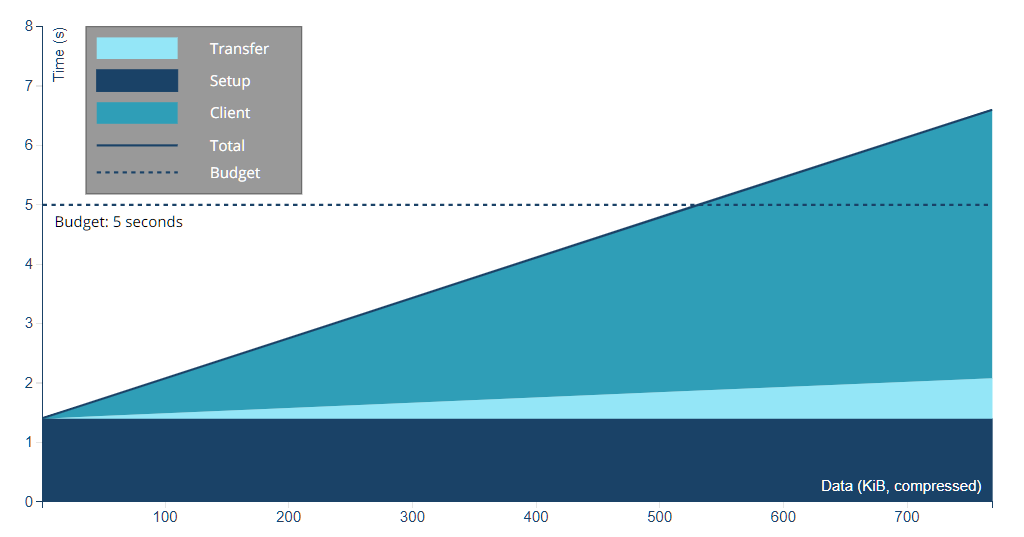

The 2017 baseline post included a small model for thinking about how various factors of a page's construction influence the likelihood of hitting a 5-second first load goal.

The hard floor of that model (~1.6s) came from the contributions of DNS, TCP/IP, and TLS connection setup over a then-reasonable 3G network baseline, leaving only 3400ms to work with, fighting Nagle and weak CPUs the whole way. Adding just one extra connection to a CDN for a critical path resource could sink the entire enterprise. Talk about a hard target.
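One way to see where a ~1.6s floor can come from is to count round trips. The Python sketch below assumes, as a simplification that is not spelled out in the original model, four round trips per new connection before the first request byte: one for DNS, one for the TCP handshake, and two for a TLS 1.2 handshake. At the 2017 baseline's 400ms RTT that gives 1600ms; at the 170ms RTT of the 4G profile discussed later it gives 680ms, close to the ~700ms figure used for 2021.

```python
# ASSUMED handshake costs, in round trips. This is a simplification: DNS may be
# cached, TLS 1.3 needs one round trip, and QUIC/TCP Fast Open change the picture.
ROUND_TRIPS = {"dns": 1, "tcp": 1, "tls_1_2": 2}


def connection_setup_ms(rtt_ms: float, connections: int = 1) -> float:
    """Time spent setting up connections before any content bytes arrive."""
    per_connection = sum(ROUND_TRIPS.values()) * rtt_ms
    return per_connection * connections


def remaining_budget_ms(total_ms: float, rtt_ms: float, connections: int = 1) -> float:
    """What is left of a load-time goal after connection setup."""
    return total_ms - connection_setup_ms(rtt_ms, connections)


print(connection_setup_ms(400))        # 2017 3G baseline: 1600
print(remaining_budget_ms(5000, 400))  # 3400
print(connection_setup_ms(170))        # 2021 P75 4G link: 680
print(connection_setup_ms(170, 2))     # two critical-path connections: 1360
```

The `connections` parameter is where the "one extra CDN connection" penalty shows up: every additional origin in the critical path repeats the whole handshake sequence.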

Four years later, has anything changed? I'm happy to report that it has. Not as much as we'd like, of course, but the worldwide baseline has changed enormously. How? Why?

India has been the epicentre of smartphone growth in recent years, owing to the sheer size of its market and an accelerating shift away from feature phones, which made up the majority of Indian mobile devices until as late as 2019. Continued projections of double-digit market growth for smartphones, on top of a doubling of shipments in the past 5 years, paint a vivid picture.

Key to this growth is the effect of Reliance Jio's entry into the carrier market. Before Jio's disruptive pricing and aggressive rollout, data services in India were among the most expensive in the world relative to income and heavily reliant on 3G outside wealthier "tier 1" cities. Worse, 3G service often performed like 2G in other markets, thanks to under-provisioning and throttling by incumbent carriers.

In 2016, Jio swept over the subcontinent like a monsoon dropping a torrent of 4G infrastructure and free data rather than rain.

Competing carriers responded instantly, dropping prices aggressively, leading to reductions in per-packet prices approaching 95%.

Jio's blanket 4G rollout blitzkrieg shocked incumbents with a superior product at an unheard-of price, forcing the entire market to improve data rates and coverage. India became a 4G-centric market sometime in 2018.

If there's a bright spot in our construction of a 2021 baseline for performance, this is it. We can finally upgrade our assumptions about the network to assume slow-ish 4G almost everywhere (pdf).

5G looks set to continue a bumpy rollout for the next half-decade. Carriers make different frequency band choices in different geographies, and 5G performance is heavily sensitive to mast density, which will add confusion for years to come. Suffice to say, 5G isn't here yet, even if wealthy users in a few geographies come to think of it as "normal" far ahead of worldwide deployment.[4]

Hardware Past As Performance Prologue

Whatever progress runtimes and networks have made in the past half-decade, browsers are stubbornly situated in the devices carried by real-world users, and the single most important thing to understand about the landscape of devices your sites will run on is that they are not new phones.

This makes some intuitive sense: smartphones are not in their first year (and haven't been for more than a dozen years), and most users do not replace their devices every year. Most smartphone sales today are replacements (that is, to users who have previously owned a smartphone), and the longevity of devices continues to rise.

The worldwide device replacement average is now 33 months. In markets near smartphone saturation, that means we can expect the median device to be nearly 18 months old. Newer devices continue to be faster for the same dollar spent. Assuming average selling prices (ASPs) remain in a narrow year-on-year band in most geographies,[5] a good way to think of the "average phone" is as the average device sold 18 months ago. ASPs, however, have started to rise, making my prognostications from 2017 only technically correct:

The true median device from 2016 sold at about ~$200 unlocked. This year's median device is even cheaper, but their performance is roughly equivalent. Expect continued performance stasis at the median for the next few years. This is part of the reason I suggested the Moto G4 last year and recommend it or the Moto G5 Plus this year.

Median devices continue to be different from averages, but we can squint a little as we're abstracting over multi-year cohorts. The worldwide ASP 18 months ago was ~$300USD, so the average performance in the deployed fleet can be represented by a $300 device from mid-2019. The Moto G7 very much looks the part.

Compared to devices wealthy developers carry, the performance is night and (blinding) day. However shocking a 6x difference in single-thread CPU performance might be, it's nothing compared to where we should be setting the global baseline: the P75+ device. Using our little mental model for device age and replacement, we can naively estimate what the 75th percentile (or higher) device could be in terms of device price + age, either by tracking devices at half the ASP at half the replacement age, or by looking at ASP-priced devices 3/4 of the way through the replacement cycle. Today, either method returns a similar answer.

This is inexact for dozens of reasons, not least of all markets with lower ASPs not yet achieving smartphone saturation. Using a global ASP as a benchmark can further mislead thanks to the distorting effect of ultra-high-end prices rising while shipment volumes stagnate. It's hard to know which way these effects cut when combined, so we're going to make a further guess: we'll take half the average price and note how wrong this likely is.[6]
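The two P75 estimation methods described above can be written down as a tiny model. This is a sketch under the post's own simplifying assumptions (steady ASPs, a steady replacement cycle); the 33-month cycle and ~$300 ASP come from the text, while the `DeviceProxy` type and function names are illustrative. With those inputs, the first method points at a ~$150 device sold about 16.5 months ago and the second at a ~$300 device sold about 25 months ago.

```python
from dataclasses import dataclass


@dataclass
class DeviceProxy:
    price_usd: float   # launch price of the stand-in device
    age_months: float  # how long ago that device was sold


def p75_proxy_half_price(asp_usd: float, replacement_months: float) -> DeviceProxy:
    # Method 1: a device at half the ASP, at half the replacement age.
    return DeviceProxy(asp_usd / 2, replacement_months / 2)


def p75_proxy_late_cycle(asp_usd: float, replacement_months: float) -> DeviceProxy:
    # Method 2: an ASP-priced device three quarters of the way through the cycle.
    return DeviceProxy(asp_usd, replacement_months * 3 / 4)


ASP_USD = 300            # worldwide ASP ~18 months before writing (from the text)
REPLACEMENT_MONTHS = 33  # worldwide replacement average (from the text)

print(p75_proxy_half_price(ASP_USD, REPLACEMENT_MONTHS))
print(p75_proxy_late_cycle(ASP_USD, REPLACEMENT_MONTHS))
```

Neither output is a phone; each is a price and a date to go shopping for, which is exactly how the model gets used next.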

So what did $150USD fetch in 2019?

Say hello to the Moto E6! This 2GiB RAM, Android 9 stalwart features the all-too classic lines of a Quad-core A53 (1.4GHz, small mercies) CPU, tastefully presented in a charming 5.5" package.

If those specs sound eerily familiar, it's perhaps because they're identical to 2016's $200USD Moto G4, all the way down to the 2011-vintage 28nm SoC process node used to fab the chip's anemic, 2012-vintage A53 cores. There are differences, of course, but not where it counts.

You might recall the Moto G4 as a baseline recommendation from 2016 for forward-looking performance work. It's also the model we sent several dozen of to Pat Meenan — devices that power webpagetest.org/easy to this day. There was no way to know that the already-ageing in-order, near-zero-cache A53 + too-hot-to-frequency-scale 28nm process duo would continue to haunt us five years on. Today's P75 devices tell the story of the yawning Performance Inequality Gap: performance for those at the top end continues to accelerate away from the have-nots who are perpetually stuck with 2014's hardware.

The good news is that chip progress has begun to move, if glacially, in the past couple of years. 28nm chips are being supplanted at the sub-$200USD price-point by 14nm and even 11nm parts.[7] Specs are finally improving quickly at the bottom of the market now, with new ~$135USD devices sporting CPUs that should give last year's mid-range a run for its money.

Mind The Gap

Regardless, the overall story for hardware progress remains grim, particularly when we recall how long device replacement cycles are:

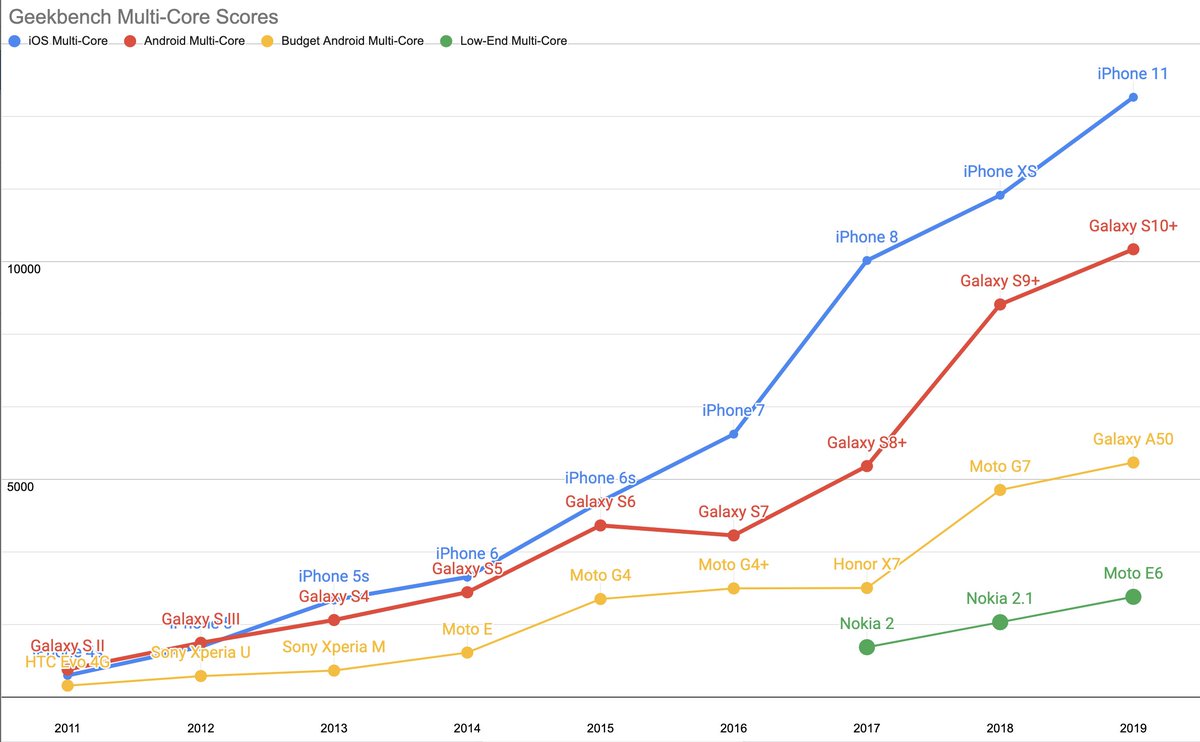

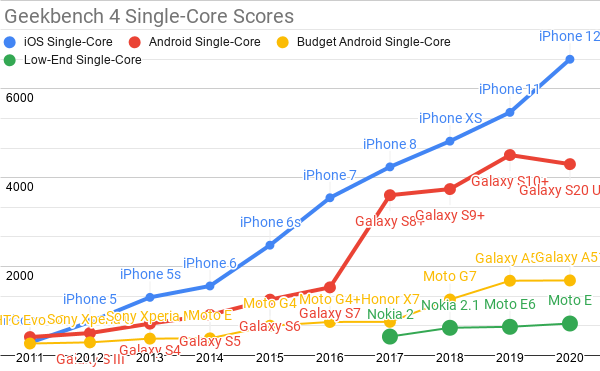

Updated Geekbench 4 single-core scores for each mobile price-point.

Recall that single-core performance most directly translates into speed on the web. Android-ecosystem SoC performance is, in a word, disastrous.

How bad is it?

We can think about each category in terms of years behind contemporary iPhone releases:

- 2020's high-end Androids sport the single-core performance of an iPhone 8, a phone released in Q3'17

- mid-priced Androids were slightly faster than 2014's iPhone 6

- low-end Androids have finally caught up to the iPhone 5 from 2012

You're reading that right: single-core Android performance at the low end is both shockingly bad and dispiritingly stagnant.

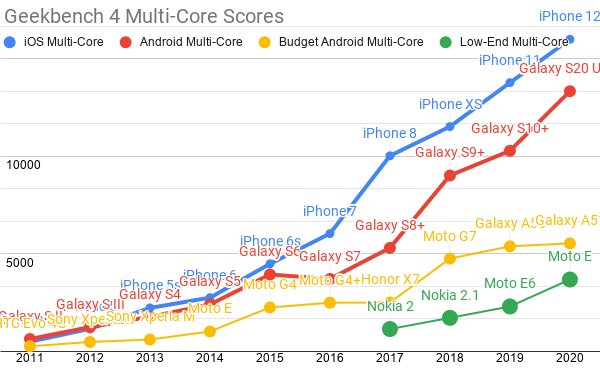

Android-ecosystem SoCs fare slightly better on multi-core performance, but the Performance Inequality Gap is growing there, too.

The multi-core performance shows the same basic story: iOS's high-end and the most expensive Androids are pulling away from the volume-shipment pack. The fastest Androids predictably remain 18-24 months behind, owing to cheapskate choices about cache sizing by Qualcomm, Samsung Semiconductor, MediaTek, and the rest. Don't pay a lot for an Android-shaped muffler.

Chip design choices and silicon economics are the defining feature of the still-growing Performance Inequality Gap.

Things continue to get better and better for the wealthy, leaving the rest behind. When we construct a digital world to the limits of the best devices, we build a worse experience, on average, for those who cannot afford iPhones or $800 Samsung flagships.

It is perhaps predictable that, instead of presenting a bulwark against stratification, technology outcomes have tracked society's growing inequality. A yawning chasm of disparities is playing out in our phones at the same time it has come to shape our economic and political lives. It's hard to escape thinking they're connected.

Developers, particularly in Silicon Valley firms, are definitionally wealthy and enfranchised by world-historical standards. Like upper classes of yore, comfort ("DX") comes with courtiers happy to declare how important comfort must surely be. It's bunk, or at least most of it is.

As frontenders, our task is to make services that work well for all, not just the wealthy. If improvements in our tools or our comfort actually deliver improvements in that direction, so much the better. But we must never forget that measurable improvement for users is the yardstick.

Instead of measurement, we seem to suffer a proliferation of postulates about how each new increment of comfort must surely result in better user experiences. But the postulates are not tied to robust evidence. Instead, experience teaches that it's the process of taking care to attend to those least-well-off that changes outcomes. Trickle-down user experience from developer-experience is, in 2021, as fully falsified as the Laffer Curve. There's no durable substitute for compassion.

A 2021 Global Baseline

It's in that spirit that I find it important to build to a strawperson baseline device and network target. Such a benchmark serves as a stand-in until one gets situated data about a site or service's users, from whom we can derive informed conclusions about user needs and appropriate resource budgets.

A global baseline is doubly important for generic frameworks and libraries, as they will be included in projects targeted at global-baseline users, whether or not developers have that in mind. Tools that cannot fit comfortably within, say, 10% of our baseline budget should be labelled as desktop-only or replaced in our toolchains. Over the past 5 years, many popular tools have blithely punctured these limits relative to the 2017 baseline, and the results have been predictably poor.

Given all the data we've slogged through, we can assemble a sketch of the browsers, devices, and networks today's P50 and P75 users will access sites from. Keep in mind that this baseline might be too optimistic, depending on your target market. Trust but verify.

OpenSignal's global report on connection speeds (pdf) suggests that WebPageTest's default 4G configuration (9Mbps w/ 170ms RTT) is a reasonable stand-in for the P75 network link. WPT's LTE configuration (12Mbps w/ 70ms RTT) may actually be too conservative for a P50 estimate; something closer to 25Mbps seems to approach the worldwide median today. Network progress has been astonishing, particularly channel capacity (bandwidth). Sadly, data on latency is harder to get, even from Google's perch, so progress there is somewhat more difficult to judge.

As for devices, the P75 situation remains dire and basically unchanged from the baseline I suggested more than five years ago. Slow A53 cores — a design first introduced nearly a decade ago — continue to dominate the landscape, differing only slightly in frequency scaling (through improved process nodes) and GPU pairing. The good news is that this will change rapidly in the next few years. The median new device is already benefiting; modern designs and fab processes are powering a ramp-up in performance for a $300USD device, particularly in multi-core workloads. But the hardware future is not evenly distributed, and web workloads aren't heavily parallel.

Astonishingly, I believe this means that for at least the next year we should consider the venerable Moto G4 to still be our baseline. If you can't buy one new, the 2020-vintage Moto E6 does a stirring rendition of the classic; it's basically the same phone, after all. The Moto E7 Plus is a preview of better days to come, and they can't arrive soon enough.

"All models are wrong, but some are useful."

Putting it all together, where does that leave us? Back-of-the-napkin, and accepting our previous targets of 5 seconds for first load and two seconds for second load, what can we afford?

Plugging the new P75 numbers in, the impact of network improvements is dramatic. Initial connection setup times are cut by more than half, dropping from 1600ms to 700ms, which frees up significant headroom to transmit more data in the same time window. The channel capacity increase to 9Mbps is enough to transmit more than 4MiB of content over a single connection in our 5-second window (1 byte == 8 bits, so 9Mbps is just over ~1MBps). Of course, if most of this content is JavaScript, it must be compiled and run, shrinking our content window back down significantly. Using a single connection (thanks to the magic of HTTP/2), a site composed primarily of JavaScript loaded perfectly can, in 2021, weigh nearly 600KiB and still hit the first-load bar.
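The throughput arithmetic is easy to check. This Python sketch assumes an idealised link that runs at its full nominal 9Mbps for the whole window left after ~700ms of connection setup, with no TCP slow start and no packet loss, so real connections will deliver less.

```python
def transferable_bytes(bandwidth_mbps: float, window_ms: float) -> float:
    """Bytes an idealised link can carry in window_ms (ignores slow start and loss)."""
    bits_per_second = bandwidth_mbps * 1_000_000
    bytes_per_second = bits_per_second / 8  # 1 byte == 8 bits
    return bytes_per_second * window_ms / 1000


MIB = 1024 * 1024

# 5000ms first-load goal minus ~700ms of connection setup on the P75 4G link.
window_ms = 5000 - 700
total = transferable_bytes(9, window_ms)
print(f"{total:,.0f} bytes = {total / MIB:.2f} MiB")  # 4,837,500 bytes = 4.61 MiB
```

Bandwidth stops being the binding constraint well before these numbers; the JavaScript processing cost discussed next is what pulls the practical budget down.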

That's a very fine point to balance on, though. A single additional TCP/TLS connection setup in the critical path reduces the amount by 100KiB (as do subsequent critical-path network handshakes). Serialized requests for data, high TTFBs, and ever-present font serving issues make these upper limits far higher than what a site shooting for consistently good load times should be aiming for. In practice, you can't actually afford 600KiB of content if your application is built in the increasingly popular "single page app" style.

As networks have improved, client-side CPU time now dominates script download, adding further variability. Not all script parses and runs in the same amount of time for the same size. The contribution of connection setup also looms large.

If you want to play with the factors and see how they contribute, you can try this slightly updated version of 2017's calculator.

This model of browser behaviour, network impacts, and device processing time is, of course, wrong for your site. It could be wrong in ways big and small. Perhaps your content is purely HTML, CSS, and images, which would allow for a much higher budget. Or perhaps your JS isn't visually critical path, but still creates long delays because of delayed fetching. And nobody, realistically, can predict how much main-thread work a given amount of JS will cause. So it's an educated guess with some padding built in to account for worst-case content-construction (which is more common than you or I would like to believe).

Conservatively then, assuming at least 2 connections need to be set up (burning ~1400 of our 5000ms), and that script resources are in the critical path, the new global baseline leaves space for ~100KiB (gzipped) of HTML/CSS/fonts and 300-350KiB of JavaScript on the wire (compressed). Critical path images for LCP, if any, need to subtract their transfer size from one of these buckets, with trades against JS bundle size potentially increasing total download budget, but the details matter a great deal. For "modern" pages, half a megabyte is a decent hard budget.
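For readers who want to poke at the shape of this trade-off in code, here is a deliberately simplified Python sketch. It is not the post's calculator: it models a strictly serial sequence (connection setup, then transfer, then script processing) and ignores TCP slow start. The 5000ms goal, ~700ms per connection, 9Mbps link, and ~100KiB of HTML/CSS/fonts come from the text; `js_cpu_ms_per_kib` is a made-up placeholder that should be replaced with numbers traced on a real baseline device, so the outputs are not meant to reproduce the 300-350KiB figure.

```python
from dataclasses import dataclass


@dataclass
class Baseline:
    goal_ms: float = 5000           # first-load target (from the text)
    setup_ms_per_conn: float = 700  # P75 4G connection setup (from the text)
    bandwidth_mbps: float = 9       # P75 4G link (from the text)
    # HYPOTHETICAL: main-thread ms per KiB of compressed JS (parse, compile,
    # run). Real costs vary widely by engine, device, and code shape.
    js_cpu_ms_per_kib: float = 9.0


def download_ms(kib: float, bandwidth_mbps: float) -> float:
    bits = kib * 1024 * 8
    return bits / (bandwidth_mbps * 1000)  # Mbps == 1000 bits per ms


def max_js_kib(b: Baseline, connections: int, other_kib: float) -> float:
    """Largest compressed JS payload that still fits the goal, assuming
    setup, download, and script processing happen strictly one after another."""
    remaining = b.goal_ms - connections * b.setup_ms_per_conn
    remaining -= download_ms(other_kib, b.bandwidth_mbps)
    per_js_kib = download_ms(1, b.bandwidth_mbps) + b.js_cpu_ms_per_kib
    return max(remaining / per_js_kib, 0.0)


b = Baseline()
for conns in (1, 2, 3):
    # ~100KiB of HTML/CSS/fonts in the critical path, as in the text.
    print(conns, "connection(s):", round(max_js_kib(b, conns, other_kib=100)), "KiB of JS")
```

The useful lesson is the structure, not the numbers: each extra critical-path connection subtracts a fixed slice of time, and because JS is charged both to download and to CPU, that slice costs far more script than it would cost HTML or CSS.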

Is that a lot? Well, 2-4x our previous budget, and as low-end CPUs finally begin to get faster over the next few years, we can expect it to loosen further for well-served content.

Sadly, most sites aren't perfectly served or structured, and most mobile web pages send more than this amount of JS. But there's light at the end of the tunnel: if we can just hold back the growth of JS payloads for another year or two — or reverse the trend slightly — we might achieve a usable web for the majority of the world's users by the middle of the decade.

Getting there involves no small amount of class traitorship; the frontend community will need to value the commons over personal comfort for a little while longer to ease our ecosystem back toward health. The past 6 years of consulting with partner teams has felt like a dark remake of Groundhog Day, with a constant parade of sites failing from the get-go thanks to Framework + Bundler + SPA architectures that are mismatched to the tasks and markets at hand. Time (and chips) can heal these wounds if we only let it. We only need to hold the line on script bloat for a few years for devices and networks to overtake the extreme, unconscionable excesses of the 2010s.

Baselines And Budgets Remain Vital

I mentioned near the start of this too-long piece that metrics better than Time-to-Interactive have been developed since 2017. The professionalism with which the new Core Web Vitals (CWV) have been developed, tested, and iterated on inspires real confidence. CWV's constituent metrics and limits work to capture important aspects of page experiences as users perceive them on real devices and networks.

As RUM (field) metrics rather than bench tests, they represent ground truth for a site, and are reported in aggregate by the Chrome User Experience Report (CrUX) pipeline. Getting user-population level data about the performance of sites without custom tooling is a 2016-era dream come true. Best of all, the metrics and limits can continue to evolve in the future as we gain confidence that we can accurately measure other meaningful components of a user's journey.

These field metrics, however valuable, don't yet give us enough information to guide global first-best-guess making when developing new sites. Continuing to set performance budgets is necessary for teams in development, not least of all because conformance can be automated in CI/CD flows. CrUX data collection and first-party RUM analytics of these metrics require live traffic, meaning results can be predicted but only verified once deployed.
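Budget conformance is straightforward to automate in CI. Below is a minimal Python sketch; the `dist` directory, the extension-to-bucket mapping, and the split of the baseline into a 100KiB HTML/CSS/fonts bucket and a 300KiB JS bucket (the conservative end of the range above) are illustrative assumptions. Gzip at level 9 only approximates what a production server or CDN actually sends.

```python
import gzip
import sys
from pathlib import Path

# Budgets in KiB of gzipped transfer size, drawn from the 2021 baseline.
BUDGETS_KIB = {"markup_styles_fonts": 100, "script": 300}

# HYPOTHETICAL mapping of file extensions to budget buckets.
BUCKETS = {
    ".html": "markup_styles_fonts",
    ".css": "markup_styles_fonts",
    ".woff2": "markup_styles_fonts",  # already compressed; gzip barely shrinks it
    ".js": "script",
    ".mjs": "script",
}


def gzipped_kib(data: bytes) -> float:
    """Approximate transfer size in KiB using gzip at maximum compression."""
    return len(gzip.compress(data, compresslevel=9)) / 1024


def check_budgets(files: dict[str, bytes],
                  budgets: dict[str, float] = BUDGETS_KIB) -> list[str]:
    """Return human-readable budget violations; an empty list means pass."""
    totals = {bucket: 0.0 for bucket in budgets}
    for name, data in files.items():
        bucket = BUCKETS.get(Path(name).suffix)
        if bucket in totals:
            totals[bucket] += gzipped_kib(data)
    return [f"{bucket}: {totals[bucket]:.1f}KiB exceeds {limit}KiB budget"
            for bucket, limit in budgets.items() if totals[bucket] > limit]


if __name__ == "__main__":
    # HYPOTHETICAL build output directory holding critical-path assets.
    dist = Path("dist")
    files = ({p.name: p.read_bytes() for p in dist.iterdir() if p.is_file()}
             if dist.is_dir() else {})
    problems = check_budgets(files)
    for problem in problems:
        print(problem)
    if problems:
        sys.exit(1)  # a non-zero exit fails the CI job
```

A check like this catches regressions before they ship; RUM and CrUX data then confirm (or refute) that the budget was the right one for real users.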

Both budgets and RUM analytics will continue to be powerful tools in piercing the high-performance privilege bubble that surrounds today's frontend discourse. Grounding our choices against a baseline and measuring how it's going for real users are the only proven approaches I know that reliably help teams take the first step toward frontend's first and highest calling: a web that's truly for everyone.

[1] One subtle effect of browser attempts to avoid showing users blank white screens is that optimisations meant to hide latency may lead users to misattribute which sites are slow.

Consider a user tapping a link away from your site to a slow, cross-origin site. If the server takes a long time to respond, the referring page will be displayed for as long as it takes for the destination server to return with minimally parseable HTML content. Similarly, if a user navigates to your site, but the previous site's onunload handlers take a long time to execute (an all too common issue), no matter how fast your site responds, the poor performance of the referring page will make your site appear to load slowly.

Even without hysteresis effects, the performance of referrers and destinations can hurt your brand. ⇐

[2] A constant challenge is the tech world's blindness to devices carried by most users. Tech press coverage is over-fitted to the high-end phones sent for review (to say nothing of the Apple marketing juggernaut), which doesn't track with shipment volumes.

Smartphone makers (who are often advertisers) want attention for the high-end segment because it's where the profits are, and the tech press rarely pushes back. Column inches covering the high-end remain heavily out of proportion to sales by price-point. In a world with better balance, most articles would be dedicated to announcements of mid-range devices from LG and Samsung.

International tech coverage would reflect that, while Apple finally pierced 50% US share for the first time in a decade at the end of 2020, iOS smartphone shipments consistently max out at ~20% worldwide, toiling near 15% most quarters.

There's infinitely more to say about these biases and how they manifest. It's constantly grating that Qualcomm, Samsung Semiconductor, MediaTek, and other Android SoC vendors get a pass (even at the high end) on their terrible price/performance.[7]

Were we to suffer an outbreak of tenacious journalism in our tech press, coverage of the devastatingly bad CPUs or the galling OS update rates amongst Android OEMs might be among the early signs. ⇐

[3] In the worst cases, off-thread compilation has no positive impact on client-side rendered SPAs. If an experience is blocked on a script to begin requesting data or generating markup from it, additional cores can't help. Some pages can be repaired with a sprinkling of <link rel=preload> directives.

More frequently, "app"-centric architectures promulgated by popular framework starter kits leave teams with immense piles of JS to whittle down. This is expensive, gruelling work in the Webpack mines; not the "developer experience" folks signed up for.

The React community, in particular, has been poorly served by a lack of guidance, tooling, and support from Facebook. Given the scale of the disaster that has unfolded there, it's shocking that in 2021 (and after recent re-development of the documentation site) there remains no guidance about bundle sizes or performance budgets in React's performance documentation.

Facebook, meanwhile, continues to staff a performance team, sets metrics and benchmarks, and generally exhibits the sort of discipline about bundle sizes and site performance that allows teams to succeed with any stack. Enforced latency and performance budgets tend to have that effect.

FB, in short, knows better. ⇐

[4] As ever, take the money and introductions VCs offer, but interpret their musings about what "everyone knows" and "how things are" like you might Henry Kissinger's geopolitical advice: toxic, troubling — and above all — unfailingly self-serving. ⇐

[5] Device Average Selling Prices (ASPs) are both directionally helpful and deeply misleading.

Global ASPs suffer all the usual issues of averages. A slight increase or decrease in the global ASP can mask extreme variance between geographies, major disparities in volumes, and shifts in prices at different deciles.

We see this misfeature in the wild through press treatment of large headline increases in ASP and iOS share when Android device sales fall even slightly. Instead of digging into the shape of the distribution and relative time series inputs, the press regularly writes this up as the sudden success of some new Apple feature. That's always possible, but a slightly stylised version of these facts more easily passes Occam's test: wealthy users are less sensitive in their technology refresh timing decisions.

This makes intuitive sense: in a recession, replacing consumer electronics is a relative luxury, leading the most price-sensitive buyers — overwhelmingly Android users — to push off replacing otherwise functional devices.

Voila! A slight dip in the overwhelming volume of Android purchases appears to bolster both ASP and iOS share since iOS devices are almost universally more expensive. ASP might be a fine diagnostic metric in limited cases, but it always pays to develop a sense for the texture of your data! ⇐
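The mix effect is easy to reproduce with a worked example. The Python sketch below uses HYPOTHETICAL round numbers (not real market data): a 10% dip in Android unit volume, with every device price and iOS volume held exactly constant, still raises both the blended ASP and iOS share.

```python
def mix(android_units: float, android_asp: float,
        ios_units: float, ios_asp: float) -> tuple[float, float]:
    """Return (blended ASP, iOS unit share) for a two-segment market."""
    units = android_units + ios_units
    revenue = android_units * android_asp + ios_units * ios_asp
    return revenue / units, ios_units / units


# HYPOTHETICAL inputs: units in millions, prices in USD.
before = mix(android_units=85, android_asp=250, ios_units=15, ios_asp=800)
# Price-sensitive Android buyers delay replacement: Android volume dips 10%.
after = mix(android_units=76.5, android_asp=250, ios_units=15, ios_asp=800)

print(f"before: ASP ${before[0]:.2f}, iOS share {before[1]:.1%}")  # $332.50, 15.0%
print(f"after:  ASP ${after[0]:.2f}, iOS share {after[1]:.1%}")    # $340.16, 16.4%
```

Nothing about any individual phone changed; only the composition of buyers did.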

[6] While the model of median device representation presented here is hand-wavey, I've cross-referenced the results with Google's internal analysis and the model holds up OK. Native app and SDK developers with global reach can use RAM, CPU speeds, and screen DPI to bucket devices into high/medium/low tiers, and RAM has historically been a key signifier of device performance overall. Indeed, some large sites I've worked with have built device model databases to bucket traffic based on system RAM to decide which versions/features to serve.
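A minimal Python sketch of RAM-based bucketing of the kind described above. The GiB thresholds are HYPOTHETICAL placeholders, not Google's or any site's actual cut-offs; real tiering would be calibrated against field performance data for your own traffic.

```python
from enum import Enum


class Tier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# HYPOTHETICAL thresholds, in GiB of system RAM.
LOW_MAX_GIB = 2
MEDIUM_MAX_GIB = 4


def tier_for_ram(ram_gib: float) -> Tier:
    """Bucket a device by system RAM alone (a coarse proxy for overall performance)."""
    if ram_gib <= LOW_MAX_GIB:
        return Tier.LOW
    if ram_gib <= MEDIUM_MAX_GIB:
        return Tier.MEDIUM
    return Tier.HIGH


print(tier_for_ram(2))  # Tier.LOW, e.g. a 2GiB Moto E6-class device
```

On the web, Chromium-based browsers expose a deliberately coarsened RAM figure through `navigator.deviceMemory` and the `Device-Memory` client hint, which can feed the same kind of bucketing without a device model database.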

Our ASP + age model could be confounded by many factors: ASPs deviating more than expected year-on-year, non-linearities in price/performance (e.g., due to a global chip shortage), or sudden changes in device replacement rates. Assuming these factors hold roughly steady, so will the model's utility. Always gut-check the results of a model! Caveat emptor.

In our relatively steady-state, 90+% of active smartphones were sold in the last 4 years. This makes some intuitive sense: batteries degrade, screens crack, and disks fill up. Replacement happens.

If our napkin-back modelling has any bias, it's slightly too generous about device prices (too high) and age (too young) given the outsized historical influence of wealthier markets achieving smartphone saturation ahead of emerging ones. Given that a new baseline is being set in an environment where we know the bottom-end will start to rise in terms of performance over the next few years (instead of staying stagnant), this bias, while hard to quantify, doesn't seem deeply problematic. ⇐

While process nodes are starting to move forward in a hopeful way, the overall design tradeoffs of major Android-ecosystem SoC vendors are not. Recently updated designs for budget parts finally get out-of-order dispatch and better parallelism.

Sadly, the feeble caches they're mated to ensure whatever gains are unlocked by better microarchitectures, plus higher frequencies, go largely to waste. It's not much good to clock a core faster or give it more ability to dispatch ops if it's only spinning up to a high power state to stall on main memory, 100s of cycles away.

Apple consistently wins benchmarks on both perf and power by throwing die space at the ARBs and caches to feed their hungry, hungry cores (among other improvements to core design). Any vendor with an ARM Architectural License can play this game, but either Qualcomm et al. are too stingy to fork out $200K (or $800K?) for a full license to customise the core of the thing they market, or they don't want to spend more to fund the extra design time or increase mm^2 of die space (both of which might cut into margins).

Whatever the reason, these designs are (literally) hot garbage, dollar-for-dollar, compared to the price/performance of equivalent-generation Apple designs. It's a trickle-down digital divide, on a loop. The folks who suffer are end-users who can't access services and information they need quickly because developers in the privilege bubble remain insulated by wealth from all of these effects, oblivious to the ways that a market failure several layers down the stack keeps them ignorant of their malign impacts on users.

Election Season 2020, W3C TAG Edition

Update: Jeffrey's own post discussing his run is now up and I recommend reading it if you're in a position to cast a vote in the election.

Two years ago, as I announced that I wouldn't be standing for a fourth term on the W3C's Technical Architecture Group, I wrote that:

Having achieved much of what I hoped when running for the TAG six years ago, it's time for fresh perspectives.

There was no better replacement I could hope for than Alice Boxhall. The W3C membership agreed and elected her to a two year term. Her independence, technical rigour, and dedication to putting users and their needs first have served the TAG and the broader web community exceedingly well since. Sadly, she is not seeking re-election. The TAG will be poorer without her dedication, no-nonsense approach, and depth of knowledge.

TAG service is difficult, time-consuming, and requires incredible focus to engage constructively in the controversies the TAG is requested to provide guidance about. The technical breadth required creates a sort of whiplash effect as TAG members consider low-level semantics and high-level design issues across nearly the entire web platform. That anyone serves more than one term is a minor miracle!

While I'm sad Alice isn't running again, my colleague Jeffrey Yasskin has thrown his hat into the ring in one of the most contested TAG elections of recent memory. There aren't many folks who have the temperament, experience, and track record that would make them natural TAG candidates, but Jeffrey is in that rare group and I hope that I can convince you he deserves your organisation's first-choice vote.

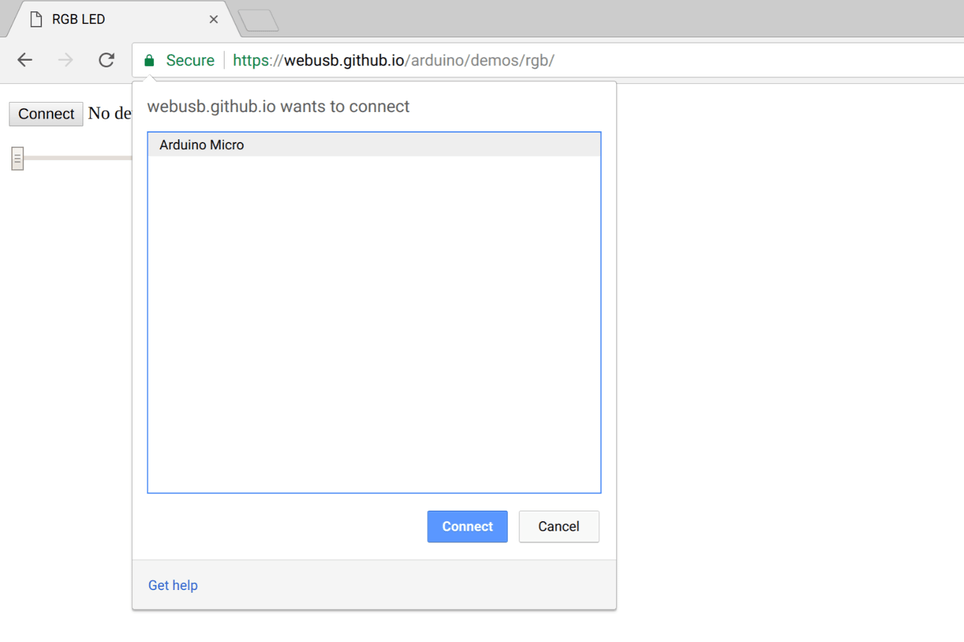

Jeffrey and I have worked closely over the past 5 years on challenging projects that have pushed the boundaries of the web's potential forward, but which also posed large risks if done poorly. It was Jeff's involvement that led to the "chooser model" for several device access APIs, starting with Web Bluetooth.

This model has since been pressed into service multiple times, notably for Chrome's mediation of the Web USB, Web HID, and Web Serial APIs. The introduction of a novel style of chooser solved thorny problems.

Previously, security and feature teams were unable to find a compromise that provided sufficient transparency and control while mitigating abuses inherent in blanket prompts. Jeffrey's innovation created space for compromise and, when combined with other abuse mitigations he helped develop for Bluetooth, was enough to surmount the objections levelled against proposals for (over)broad grants.

Choosers have unlocked educational programming without heavyweight tools, sparked a renaissance in utilities that work everywhere, and are contributing to a reduction in downloads of potentially insecure native programs for controlling IoT devices.

There's so much more to talk about in Jeffrey's background and approach to facilitating structural solutions to thorny problems — from his work to (finally) develop a grounded privacy threat model as the TAG repeatedly requested, to his stewardship of the Web Packaging work the TAG started in 2013 to his deep experience in standards at nearly every level of the web stack — but this post is already too long.

There are a lot of great candidates running for this year's four open seats on the TAG, and while I can imagine many of them serving admirably, not least of all Tess O'Connor and Sanghwan Moon who have been doing great work, there's nobody I'd rather see earn your first-choice vote than Jeffrey Yasskin. He's not one to seek the spotlight for himself, but having worked closely with him, I can assure you that, if elected, he'll earn your trust the way he has earned mine.

Resize-Resilient `content-visibility` Fixes

Update: After hitting a bug related to initial rendering on Android, I've updated the code here and in the snippet to be resilient to browsers deciding (wrongly) to skip rendering the first <article> in the document.

Update, The Second: After holiday explorations, it turns out that one can, indeed, use contain-intrinsic-size on elements that aren't laid out yet. Contra previous advice, you absolutely should do that, even if you don't know natural widths yet. Assuming the browser will calculate a width for an <article> once finally laid out, there's no harm in reserving a narrow but tall place-holder. Code below has been updated to reflect this.

The last post on avoiding rendering work for content out of the viewport with content-visibility included a partial solution for how to prevent jumpy scrollbars. This approach had a few drawbacks:

- Because `content-visibility: visible` was never removed, layouts inevitably grew slower as more and more content was unfurled by scrolling.
- Layouts caused by resize can be particularly slow on large documents as they can cause all text flows to need recalculation. This would not be improved by the previous solution if many articles had been marked `visible`.
- Jumping "far" into a document via link or quick scroll could still be jittery.

Much of this was pointed out (if obliquely) in a PR to the ECMAScript spec. One alternative is a "place-keeper" element that grows with rendered content to keep elements that eventually disappear from perturbing scroll position. This was conceptually hacky and I couldn't get it working well.

What should happen if an element in the flow changes its width or height in reaction to the browser lazy-loading content? And what if the scroll direction is up, rather than down?

For a mercifully brief while I also played with absolutely positioning elements on reveal and moving them later. This also smelled, but it got me playing with ResizeObservers. After sleeping on the problem, a better answer presented itself: leave the flow alone and use IntersectionObservers and ResizeObservers to reserve vertical space using CSS's new contain-intrinsic-size property once elements have been laid out.

I'd dismissed contain-intrinsic-size for use in the base stylesheet because it's impossible to know widths and heights to reserve. IntersectionObservers and ResizeObservers, however, guarantee that we can know the bounding boxes of the elements cheaply (post layout) and use the sizing info they provide to reserve space should the system decide to stop laying out elements (setting their height to 0) when they leave the viewport. We can still use contain-intrinsic-size, however, to reserve a conservative "place-holder" for a few purposes:

- If our element would be content-sized, we can give it a narrow `contain-intrinsic-size` width; the browser will still give us the natural width upon first layout.
- Reserving some height for each element creates space for `content-visibility`'s internal Intersection Observer to interact with. If we don't provide a height (in this case, `500px` as a guess), fast scrolls will encounter "bunched" elements upon hitting the bottom, and `content-visibility: auto` will respond by laying out all of the elements at once, which isn't what we want. Creating space means we are more likely to lay them out more granularly.

The snippet below works around a Chrome bug with applying `content-visibility: auto;` to all `<article>`s, forcing initial paint of the first, then allowing it to later be elided. Observers update sizes in reaction to layouts or browser resizing, updating place-holder sizes while allowing the natural flow to be used. Best of all, it acts as a progressive enhancement:

```html
<!-- Basic document structure -->
<html>
  <head>
    <style>
      /* Workaround for Chrome bug, part 1
       *
       * Chunk rendering for all but the first article.
       *
       * Eventually, this selector will just be:
       *
       *   body > main > article
       *
       */
      body > main > article + article {
        content-visibility: auto;
        /* Add vertical space for each "phantom" */
        contain-intrinsic-size: 10px 500px;
      }
    </style>
  </head>
  <body>
    <!-- header elements -->
    <main>
      <article><!-- ... --></article>
      <article><!-- ... --></article>
      <article><!-- ... --></article>
      <!-- ... -->
```

```html
<!-- Inline, at the bottom of the document -->
<script type="module">
  let eqIsh = (a, b, fuzz=2) => {
    return (Math.abs(a - b) <= fuzz);
  };

  let rectNotEQ = (a, b) => {
    return (!eqIsh(a.width, b.width) ||
            !eqIsh(a.height, b.height));
  };

  // Keep a map of elements and the dimensions of
  // their place-holders, re-setting the element's
  // intrinsic size when we get updated measurements
  // from observers.
  let spaced = new WeakMap();

  // Only call this when known cheap, post layout
  let reserveSpace = (el, rect=el.getBoundingClientRect()) => {
    let old = spaced.get(el);
    // Set intrinsic size to prevent jumping on un-painting:
    //    https://drafts.csswg.org/css-sizing-4/#intrinsic-size-override
    if (!old || rectNotEQ(old, rect)) {
      spaced.set(el, rect);
      el.attributeStyleMap.set(
        "contain-intrinsic-size",
        `${rect.width}px ${rect.height}px`
      );
    }
  };

  let iObs = new IntersectionObserver(
    (entries, o) => {
      entries.forEach((entry) => {
        // We don't care if the element is intersecting or
        // has been laid out as our page structure ensures
        // they'll get the right width.
        reserveSpace(entry.target,
                     entry.boundingClientRect);
      });
    },
    { rootMargin: "500px 0px 500px 0px" }
  );

  let rObs = new ResizeObserver(
    (entries, o) => {
      entries.forEach((entry) => {
        reserveSpace(entry.target, entry.contentRect);
      });
    }
  );

  let articles =
    document.querySelectorAll("body > main > article");

  articles.forEach((el) => {
    iObs.observe(el);
    rObs.observe(el);
  });

  // Workaround for Chrome bug, part 2.
  //
  // Re-enable browser management of rendering for the
  // first article after the first paint. Double-rAF
  // to ensure we get called after a layout.
  requestAnimationFrame(() => {
    requestAnimationFrame(() => {
      articles[0].attributeStyleMap.set(
        "content-visibility",
        "auto"
      );
    });
  });
</script>
```

This solves most of our previous problems:

- All articles can now take `content-visibility: auto`, removing the need to continually lay out and render the first article when off-screen. `content-visibility` functions as a hint, enhancing the page's performance (where supported) without taking responsibility for layout or positioning.
- Elements grow and shrink in response to content changes.
- Presuming browsers do a good job of scroll anchoring, this solution is robust to both upward and downward scrolls, plus resizing.
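Because `attributeStyleMap` (the CSS Typed OM) isn't available in every engine, one hedged way to keep the snippet a progressive enhancement is to feature-detect before opting in. The `setStyle()` and `supportsContentVisibility()` helpers below are hypothetical names; `CSS.supports()`, `attributeStyleMap.set()`, and `style.setProperty()` are standard DOM APIs.

```javascript
// Prefer the Typed OM setter used in the snippet above; fall back to the
// CSSOM string API in engines without attributeStyleMap.
function setStyle(el, property, value) {
  if (el.attributeStyleMap) {
    el.attributeStyleMap.set(property, value);
  } else {
    el.style.setProperty(property, value);
  }
}

// Only wire up observers when the engine understands content-visibility;
// elsewhere the document simply renders in normal flow.
function supportsContentVisibility(css = globalThis.CSS) {
  return Boolean(
    css &&
      typeof css.supports === "function" &&
      css.supports("content-visibility", "auto")
  );
}
```

In the page, the observer setup would sit inside `if (supportsContentVisibility()) { ... }`, with each `attributeStyleMap.set(...)` call routed through `setStyle(...)`.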

Debugging this wouldn't have been possible without help from Vladimir Levin and Una Kravets whose article has been an indispensable reference, laying out the pieces for me to eventually (too-slowly) cobble into a full solution.

`content-visibility` Without Jittery Scrollbars

Update: After further investigation, an even better solution has presented itself, which is documented in the next post.

The new content-visibility CSS property finally allows browsers to intelligently decide to defer layout and rendering work for content that isn't on-screen. For pages with large DOMs, this can be transformative.

In applications that might otherwise be tempted to adopt large piles of JS to manage viewports, it can be the difference between SPA monoliths and small progressive enhancements to HTML.

One challenge with naive application of content-visibility, though, is the way that it removes elements from the rendered tree once they leave the viewport, particularly as you scroll downward. If the scroll position depends on elements above the currently viewable content, "accordion scrollbars" can dance gleefully as content-visibility: auto does its thing.
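A simplified model of why the scrollbar jumps: when rendering is skipped for content above the viewport, each skipped element shrinks from its rendered height to whatever size is reserved for it (nothing, absent `contain-intrinsic-size`), and everything below moves up by the difference. The `scrollJumpAbove()` function and its inputs are hypothetical, and the model ignores scroll anchoring, which browsers may apply to compensate.

```javascript
// items: [{ renderedHeight, skippedHeight }] in document order, where
// skippedHeight is the size kept while rendering is skipped (0 with no
// reserved intrinsic size). Positions are computed from rendered heights.
function scrollJumpAbove(items, scrollTop) {
  let top = 0;
  let jump = 0;
  for (const { renderedHeight, skippedHeight } of items) {
    const bottom = top + renderedHeight;
    // Only items lying entirely above the viewport are treated as skipped.
    if (bottom <= scrollTop) {
      jump += renderedHeight - skippedHeight;
    }
    top = bottom;
  }
  return jump;
}
```

Reserving each element's measured height (so `skippedHeight` equals `renderedHeight`) drives the jump to zero, which is the idea behind reserving space with `contain-intrinsic-size`.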

To combat this effect, I've added some CSS to this site that optimistically renders the first article on a page but defers others. Given how long my articles are, it's a safe bet this won't usually trigger multiple renders for "above the fold" content on initial pageload (sorry not sorry).

This is coupled with an IntersectionObserver that unfurls subsequent articles as you scroll down.

The snippets:

```html
<style>
  /* Defer rendering for the 2nd+ article */
  body > main > *+* {
    content-visibility: auto;
  }
</style>
```

```html
<script type="module">
  let observer = new IntersectionObserver(
    (entries, o) => {
      entries.forEach((entry) => {
        let el = entry.target;
        // Not currently in intersection area.
        if (entry.intersectionRatio == 0) {
          return;
        }
        // Trigger rendering for elements within
        // scroll area that haven't already been
        // marked.
        if (!el.markedVisible) {
          el.attributeStyleMap.set(
            "content-visibility",
            "visible"
          );
          el.markedVisible = true;
        }
      });
    },
    // Set a rendering "skirt" 50px above
    // and 100px below the main scroll area.
    { rootMargin: "50px 0px 100px 0px" }
  );

  let els =
    document.querySelectorAll("body > main > *+*");
  els.forEach((el) => { observer.observe(el); });
</script>
```

With this fix in place, content will continue to appear at the bottom (à la "infinite scrolling") as you scroll down, but will never vanish from the top or perturb your scroll position in unnatural ways.

The Pursuit of Appiness

TL;DR: App stores exist to provide overpowered and easily abused native app platforms a halo of safety. Pre-publication gates were valuable when better answers weren't available, but commentators should update their priors to account for hardware and software progress of the past 13 years. Policies built for a different era don't make sense today, and we no longer need to accept sweeping restrictions in the name of safety. The idea that preventing browser innovation is pro-user is particularly risible, leading to entirely avoidable catch-22 scenarios for developers and users. If we're going to get to better outcomes from stores on OSes without store diversity or side-loading, it's worth re-grounding our understanding of stores.

Contemporary debates about app stores start from premises that could use review. This post reviews these from a technical perspective. For the business side, this post by Ben Thompson is useful context.

...Only Try To Realize The Truth: There Is No "App"

OSes differ widely in the details of how "apps" are built and delivered. The differences point to a core truth: technically speaking, being "an app" is merely meeting a set of arbitrary and changeable OS conventions.

The closer one looks, the less a definition of "appiness" can be pinned down to specific technologies. Even "toy" apps with identical functionality are very different under the covers when built on each OS's preferred stack. Why are iOS apps "direct metal" binaries while Android apps use Java runtimes? Is one of these more (or less) "an app"? The lack of portability highlights the absence of clear technical underpinnings for what it means to "be an app".

To be sure, there are platonic technical ideals of applications as judged by any OS's framework team[1], but real-world needs and behaviour universally betray these ideals.[2] Native platforms always provide ways to dynamically load code and content from the network, either natively or via webviews, and the choice of code platform is not dispositive in identifying if an experience is "an app".

The solid-land definition of "an app" is best understood through UI requirements to communicate "appiness" to end-users: metadata to identify the publisher and provide app branding, plus bootstrap code to render initial screens. Store vendors might want to deliver the full user experience through the binary they vend from their stores, but that's not how things work in practice.

Industry commentators often write as though the transition to mobile rigidly aligned OSes with specific technology platforms. At a technical level, this is not correct.

Since day one, iOS has supported app affordances for websites with proprietary metadata, and Android has similar features. "Appiness" is an illusion, an arbitrary line drawn to include programs built on some platforms but not others, and all of today's OSes allow multiple platforms to expose "real apps."

Platforms, while plural, are not equal.

Apple could unveil iOS with support for web apps without the need for an app store because web applications are safe by default. Circa '07, those web apps were notably slower than native code could be. The world didn't yet have pervasive multi-process browser sandboxing, JS JITs, or metal-speed binary isolation. Their arrival, e.g. via WASM, has opened up entirely new classes of applications on a platform (the web) that demands memory safety and secure-by-default behavior as a legacy of 90s browser battles.

In '07, safety implied an unacceptable performance hit on slow single-core devices with 128MiB of RAM. Many applications couldn't be delivered if strict protection was required using the tools of the day. This challenging situation gave rise to app stores: OS vendors understood that fast-enough-for-games needed dangerous trade-offs. How could the bad Windows security experience be prevented? A priori restraint on publishing allowed vendors to set and enforce policy quickly.

Fast forward a decade, and both the software and hardware situations have changed dramatically. New low-end devices are 4-to-8 core, 2GHz systems with 2GiB of RAM. Sandboxing is now universal (tho quality may differ) and we collectively got savvy about running code quickly while retaining memory safety. The wisdom of runtime permission grants (the web's model) has come to be widely accepted.

For applications that need peak compute performance, the effective tax rate of excellent runtime security is now in the 5-10% range, rather than the order of magnitude cost a decade ago. Safety is within our budget, assuming platforms don't make exotic and dangerous APIs available to all programs — more on that in a second.

So that's apps: they're whatever the OS says they are, that definition can change at any moment, and both reasonably constrained and superpowered programs can "be apps." Portability is very much a possibility if only OSes deign to allow it[3].

What Is An "App Store" Technically?

App stores, as we know them today, are a fusion of several functions:

- Security screens to prevent malign developer behavior on overpowered native platforms

- Discovery mechanisms to help users find content, e.g., search

- App distribution tools; i.e., a CDN for binaries

- Low-friction payment clearing houses

Because app stores rose to prominence on platforms that could not afford good safety using runtime guards, security is the core value proposition of a store. When working well — efficiently applying unobjectionable policy — store screening is invisible to everyone but developers. Other roles played by stores may have value to users but primarily serve to build a proprietary moat around commodity (or lightly differentiable) proprietary, vertically integrated platforms.

Amazon and search engines demonstrate that neither payment nor discovery requires stores. If users are aware of security in app stores, it's in the breach, e.g., the semi-occasional article noting how a lousy app got through. The desired effect of pre-screening is to ensure that everything discovered in the store is safe to try, despite being built on native platforms that dole out over-broad permissions. It's exceedingly difficult to convince folks to use devices heavily if apps can brick the device or drain one's bank account.[4]

The Stratechery piece linked at the top notes how important this is:

It is essential to note that this forward integration has had huge benefits for everyone involved. While Apple pretends like the Internet never existed as a distribution channel, the truth is it was a channel that wasn’t great for a lot of users: people were scared to install apps, convinced they would mess up their computers, get ripped off, or accidentally install a virus.

Benedict Evans adopts similar framing:

Specifically, Apple tried to solve three kinds of problem.

Putting apps in a sandbox, where they can only do things that Apple allows and cannot ask (or persuade, or trick) the user for permission to do ‘dangerous’ things, means that apps become completely safe. A horoscope app can’t break your computer, or silt it up, or run your battery down, or watch your web browser and steal your bank details.

An app store is a much better way to distribute software. Users don’t have to mess around with installers and file management to put a program onto their computer - they just press ‘Get’. If you (or your customers) were technical this didn’t seem like a problem, but for everyone else with 15 copies of the installer in their download folder, baffled at what to do next, this was a huge step forward.

Asking for a credit card to buy an app online created both a friction barrier and a safety barrier - ‘can I trust this company with my card?’ Apple added frictionless, safe payment.

These takes highlight the value of safety but skip right past the late desktop experience: we didn't primarily achieve security on Windows by erecting software distribution choke points. Instead, we got protection by deciding not to download (unsafe) apps, moving computing to a safe-by-default platform: the web.[5]

In contrast with the web, native OSes have historically blessed all programs with far too much ambient authority. They offer over-broad access to predictably terrifying misfeatures (per-device identifiers, long-running background code execution and location reporting, full access to contacts, the ability to read all SMS messages, pervasive access to clipboard state, etc.).

Native platform generosity with capabilities ensures that every native app is a vast ocean of abuse potential. Pervasive "SDK" misbehavior converts this potential to dollars. Data available to SDK developers make fears about web privacy look quaint. Because native apps are not portable or standards-based, the usual web-era solutions of switching browsers or installing an extension are unavailable. To accept any part of the native app ecosystem proposition is to buy the whole thing.

Don't like the consequences? The cost of changing one's mind now starts at several hundred dollars. The moat of proprietary APIs and distribution is sold on utility and protection, but its primary role is to protect margins. A lack of app and data interoperability prevents users from leaving. The web, meanwhile, extends security as far as the link can take you. Moats aren't necessities when security is assured.

This situation is improving glacially, often at the pace of hardware replacement.[6] The slog towards mobile OSes adopting a more web-ish contract with developers hints at the interests of OS vendors. Native platforms haven't reset the developer contract to require safety because they recall what happened to Windows. When the web got to parity in enough areas, interest in the proprietary platform receded to specialist niches (e.g., AAA gaming, CAD). Portable, safe apps returned massive benefits to users who no longer needed to LARP as systems administrators.

The web's safety transformed software distribution so thoroughly that many stopped thinking of applications as such. Nothing is as terrifying for a vertically integrated hardware vendor as the thought that developers might leave their walled garden, leading users to a lower-tax-rate jurisdiction.

Safety enables low friction, and the residual costs in data and acquisition of apps through stores indicate not security, but the inherent risk of the proprietary platforms they guard. Windows, Android, and ChromeOS allow web apps in their stores, so long as the apps conform to content policies. Today, developers can reach users of all these devices on their native app stores from a single codebase. Only one vendor prevents developers from meeting user needs with open technology.

This prejudice against open, unowned, safe alternatives exists to enable differentiated integrations. Why? To preserve the ability to charge much more over the device's life than differences in hardware quality might justify. The downsides now receiving scrutiny are a direct consequence of this strategic tax on users and developers. Restrictions, once justifiably required to maintain safety, are now antique. What developer would knowingly accept such a one-sided offer if made today?

Compound Disinterest

Two critical facts about Apple's store policies bear repeating:

- Apple explicitly prevents web content within their store

- Apple prevents browser makers from shipping better engines in alternative iOS browsers[7]

It is uniquely perverse that these policies ensure the web on iOS can only ever be as competent, safe, and attractive to developers as Apple allows.

Were Apple producing a demonstrably modern and capable browser, it might not be a crisis, but Apple's browser and the engine they force others to use is years behind.

The continuing choice to under-invest in Safari and WebKit has an ecosystem-wide impact, despite iOS's modest reach. iOS developers who attempt to take Apple up on their generous offer to reach users through the web are directly harmed. The low rate of progress in Apple's browsers all but guarantees that any developer who tries will fail.

But that's not the biggest impact. Because the web's advantage is portability, web developers view features not available "everywhere" as entirely unavailable. The lowest common denominator sets a cap on developer ambitions, and Apple knows it. App Store policies that restrict meaningful browser competition ensure the cap is set low so as to prevent real competition with Apple's proprietary tools and distribution channel.

Apple doesn't need a majority of web usage to come from browsers without critical features to keep capabilities from being perceived skeptically; they only need to keep them out of the hands of, say, 10% of users. So much the better if users who cannot perceive or experience the web delivering great experiences are wealthy developers and users. Money talks.

Thirteen years of disinterest in a competitive mobile web by Apple has produced a crisis for the web just as we are free of hardware shackles. The limits that legitimated the architecture of app store control are gone, but the rules have not changed. The web was a lifeboat away from native apps for Windows XP users. That lifeboat won't come for iPhone owners because Apple won't allow it. Legitimately putting users' interests first is good marketing, but terrible for rent extraction.

Arguments against expanding web capabilities to reach minimum-mobile-viability sometimes come wrapped in pro-privacy language from the very parties that have flogged anti-privacy native app platforms[8]. These claims are suspect. Detractors (perhaps unintentionally) conflate wide and narrow grants of permission. The idea that a browser may grant some sites a capability is, of course, not equivalent to giving superpowers to all sites with low friction. Nobody's proposing to make native's mistakes.

Regardless, consistent misrepresentations are effective in stoking fear. Native apps are so dangerous they require app store gatekeepers, after all. Without looking closely at the details, it's easy to believe that expansions of web capability will be similarly broad. Thoughtful, limited expansions of heavily cabined capabilities take time and effort to explain. The nuances of careful security design are common casualties in FUD-slinging fights.

A cynic might suggest that these misrepresentations deflect questions about the shocking foreclosure of opportunities for the web that Apple has engineered — and benefits from directly.

Apple's defenders offer contradictory arguments:

- Browsers are essential to modern operating systems, and so iOS includes a good browser. To remain "a good browser," it continually adds and markets new features.

- Browsers are wildly unsafe because they load untrusted code and, therefore, they must use only Apple's (safe?) engine.[9]

- App stores ensure superpowered apps are trustworthy because Apple approves all the code.

- Apple doesn't allow web apps into the store as they might change at runtime to subvert policy.

Taken in isolation, the last two present a simple, coherent solution: ban browsers, including Safari. Also, webviews and other systems for dynamically pushing code to the client (e.g., React Native). No ads that aren't pre-packaged with the binary, thanks. No web content; you never can tell where bits of a web page might be coming from, after all! This solution also aligns with current anti-web store policies.

The first two points are coherent if one ignores the last decade of software and hardware progress. They also tell the story of iOS's history: 13 years ago, smartphones were niche, and access to the web corpus was important. The web was a critical bridge in the era before native mobile-first development. Strong browser sandboxing was only then becoming A Thing (TM), and resource limits delayed mobile browsers from providing multi-process protection. OS rootings in the low-memory, 1-2 core-count era perhaps confirmed Cupertino's suspicions.[10]

Time, software, and hardware reality have all moved forward. The most recent weaksauce served to justify this aging policy has been the need for JIT in modern browsers. Browser vendors might be happy to live under JIT-less rules should it come to that — the tech is widely available — and yet alternative engines remain banned. Why?

Whatever the reason, in 2020, it isn't security.

Deadweight Losses

Undoubtedly, web engines face elevated risks from untrusted, third-party content. Mitigating these risks has played a defining role in evolving browsers for more than twenty years.

Browsers compete on security, privacy, and other sensitive aspects of the user's experience. That competition has driven two decades of incredible security innovation without resorting to prior-restraint gatekeeping. The webkit-wrapper browsers on iOS are just as superpowered as other apps. They can easily subvert user intent and leak sensitive information (e.g., bank passwords). Trustworthiness is not down to Apple's engine restrictions; the success of secure browsers on every OS shows this to be the case. These browsers provide more power to users and developers while maintaining an even more aggressive security posture than any browser on iOS is allowed to implement.

But let's say the idea of "drive-by" web content accessing expanded capabilities unsettled Apple, so much so that they wanted to set a policy to prevent powerful features from being available to websites in mere tabs. Could they? Certainly. Apple's Add to Home Screen feature puts installed sites into little jails distinct from a user's primary browsing context. Apple could trivially enforce a policy that unlocked powerful features only upon completion of an installation ceremony, safe in the knowledge that they will not bleed out into "regular" web use.[11] We designed web APIs to accommodate a diversity of views about exposing features, and developers guard against variable support already.

Further, it does not take much imagination to see how features could be revoked from sites that abuse them. Safari, like all recent-vintage browsers, includes an extensible, list-based abuse mitigation system.

The preclusion of better iOS browsers hinges on opacity. Control derives from developer policy rather than from restrictions visible to users, rendering browser switching moot. Browser makers can either lend their brands to products they do not think of as "real browsers" or be cut off from the world's wealthiest users. No other OS forces this choice. But the drama is tucked behind the curtains, as is Apple's preference.

Most folks aren't technical enough to understand they can never leave Hotel Cupertino, and none of the alternative browsers they download even tell them they're trapped. A more capable, more competitive web might allow users to move away from native apps, leaving Apple without the ability to hold developers over the barrel. Who precludes this possibility? Apple, naturally.

A lack of real browser competition coupled with a trailing-edge engine creates deadweight losses, and these losses aren't constrained to the web. Apple's creative reliance on outdated policy explicitly favors the proprietary over the open, harming consumers along the way.

Suppose Apple wishes us to believe their App Store policies are fair while simultaneously claiming the web is an outlet for categories of apps they do not wish to host in their store. In that case, our pundit class should at least ask why Apple will (uniquely) neither allow nor build a credible, modern version of the web for iOS users.

The platonic ideal of "an app" is invariably built using the system's preferred UI toolkit and constructed to be self-contained. These apps tend to be bigger than toys, but smaller than what you might attempt as an end developer; think the system-provided calculator. ↩︎

Bits downloaded from the store seldom constrain app behavior, protests by the store's shopkeepers notwithstanding. Before the arrival of Android Dynamic Apps, many top apps used slightly shady techniques to dynamically load JARs at runtime, bypassing DEX and signing restrictions. That dynamic loading is now officially supported is a testament to how prevalent the need to compose experiences is. ↩︎

Portability is a frequent trade-off in the capability/safety discussion. Java and Kotlin Android apps are safer and more portable by default than NDK binaries, which need to worry about endianness and other low-level system properties.

The price paid for portability is a hit to theoretical maximum performance. The same is true of the web vs. Java apps, though in all cases, performance is contingent on the use-case and on where platform engineers have poured engineer-years into optimisation. ↩︎

Not draining others' bank accounts may be something of an exercise for the reader. ↩︎

Skipping over the web and its success is a curious blind spot for such thoughtful tech observers. Depressingly, it may signal that Apple has so thoroughly excluded the web from the land of the relevant that it isn't top, middle, or even bottom-of-mind. ↩︎

Worldwide sales of iOS and the high-end Android devices that get yearly OS updates are less than a third of total global handset volume. Reading this on an iPhone? Congrats! You're a global 15%-er. ↩︎

Section 2.5.6 is the "Hotel Cupertino" clause: you can pick any browser you choose, but you can never leave Apple's system-provided WebKit. ↩︎

Mozilla's current objections are, by contrast, confused to my ears, but they are at least not transparently disingenuous. ↩︎

Following this argument, Apple is justified in (uniquely) restricting the ability of other vendors to deliver more features to the web (even though adding new features is part of what it means to be "a good browser"). Perversely, the same argument is also meant to justify restrictions that prevent other engines from improving the security of both new and existing features in ways that might make Safari look bad. I wonder what year it will be when Safari supports Cross-Origin Isolation or Site Isolation on any OS?

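Why does it matter? WASM threading depends on SharedArrayBuffer, which browsers now gate behind Cross-Origin Isolation. Presumably, then, iOS will disallow WASM threading until that far-off day. ↩︎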

For all of Apple's fears about web content and Safari's security track record, Apple's restrictions on competing web engines mean that nobody else has been allowed to bring stronger sandboxing tech to the iOS party.

Chrome, for one, would very much like to improve this situation the way it has on macOS but is not allowed to. It's unclear how this helps Apple's users. ↩︎

Webviews can plumb arbitrary native capabilities into the web content they host, providing isolated storage with expanded powers. The presence of webview-powered Cordova apps in Apple's App Store raises many questions in a world where Apple neither expands Safari's capabilities to meet web developer needs nor allows other browsers to do so in their stead. ↩︎